Ch.12

Distributed DBs: what is distributed? how are transactions managed? what is the architecture?

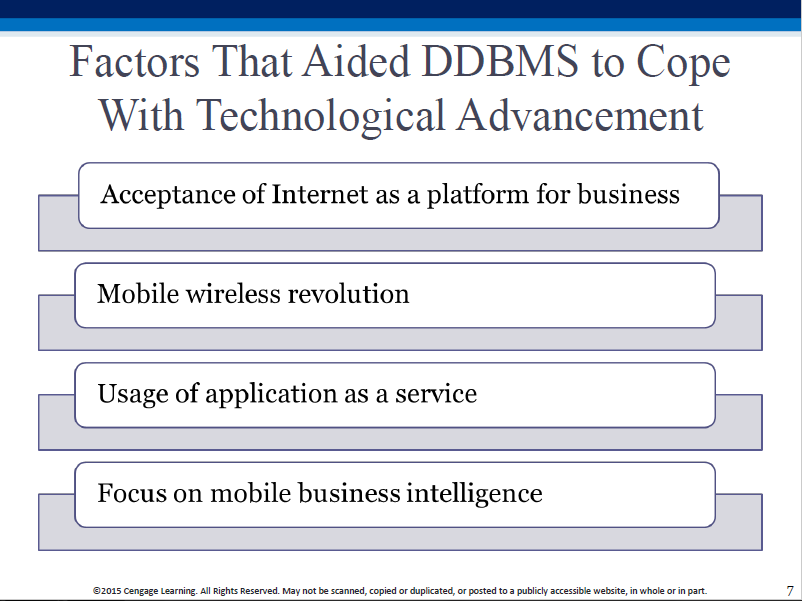

Two factors in particular necessitated change:

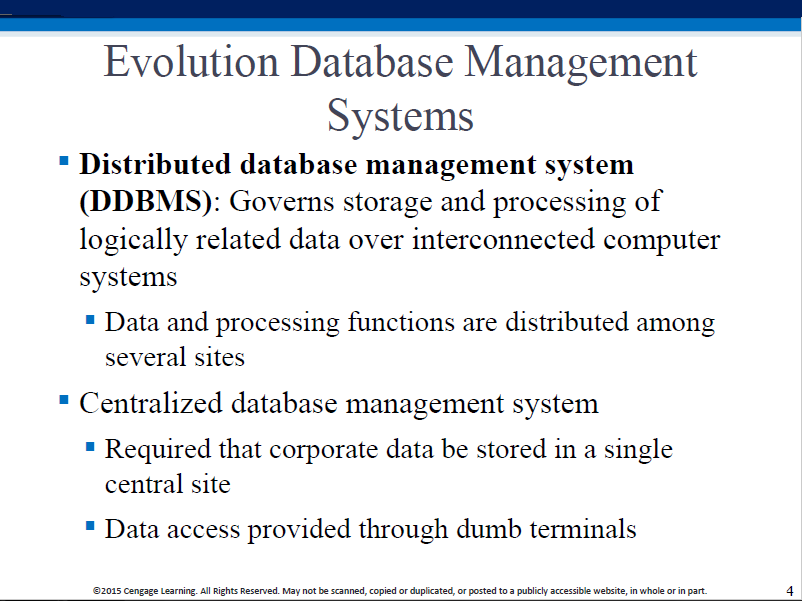

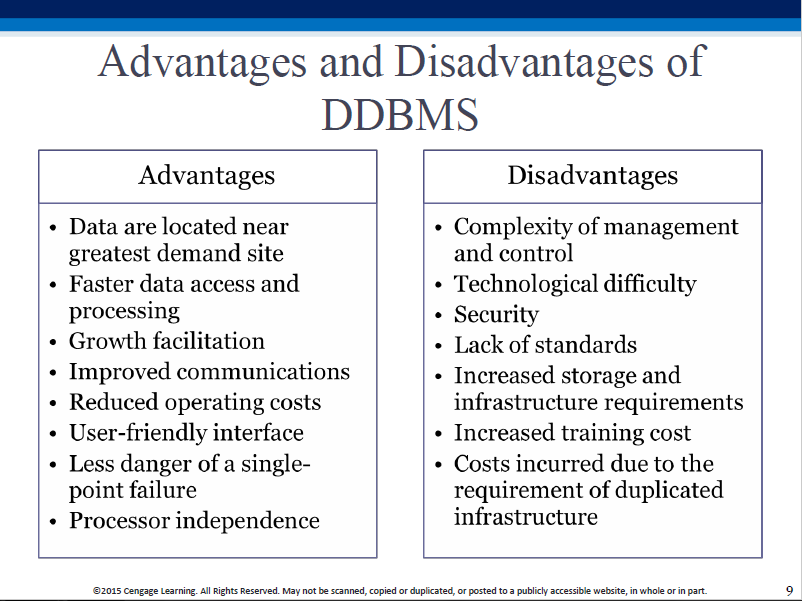

So now we have DDBMSs - distributed DBMSs.

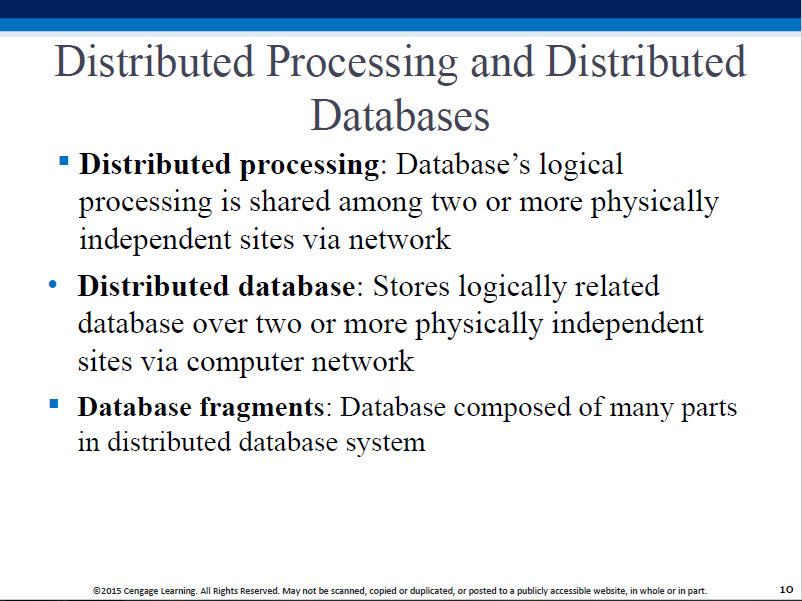

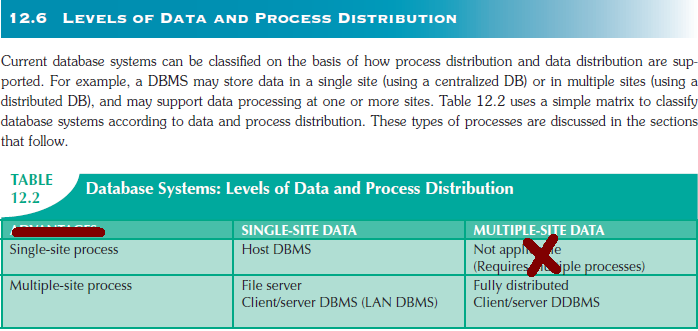

Distributed processing AND data storage -> 'fully' distributed.

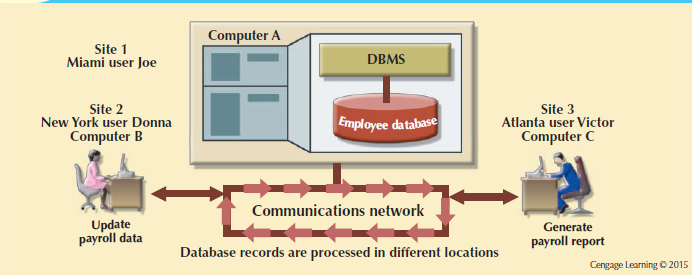

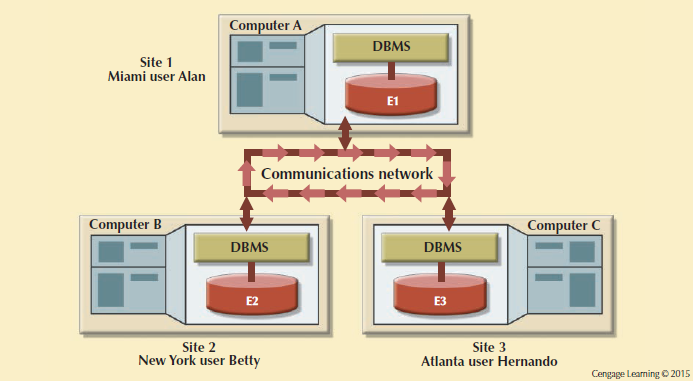

Two fragments, two sites - but each user thinks they have a single (their own) local version (transparent access).

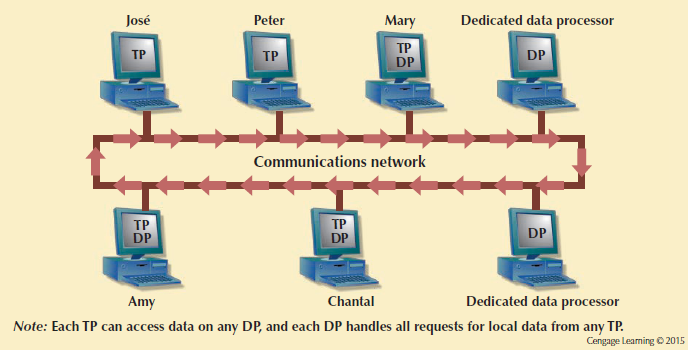

A set of protocols is used by the DDBMS, to enable the TPs and DPs to communicate with each other.

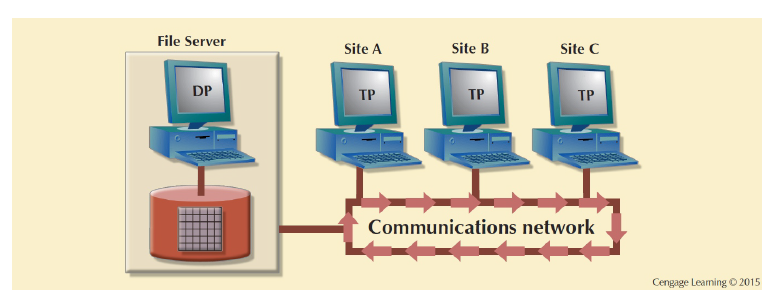

TP: Transaction Processor - receives data requests (from an application), and requests data (from a DP); also known as AP (Application Processor) or TM (Transaction Manager). A TP is what fulfills data requests on behalf of a transaction.

DP: Data Processor - receives data requests from a TP (in general, multiple TPs can request data from a single DP), retrieves and returns the requested data; also known as DM (Data Manager).
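The TP/DP split can be sketched in a few lines of Python. This is only an illustrative in-memory model: the class names, the `fetch`/`request` methods, the site names and the sample rows are all made up here, and a real DDBMS would talk over a network rather than call methods directly.

```python
from dataclasses import dataclass, field


@dataclass
class DataProcessor:
    """DP: stores local data and serves requests from any TP (hypothetical)."""
    site: str
    rows: dict = field(default_factory=dict)  # key -> row

    def fetch(self, key):
        # Retrieve and return the requested data (None if not stored here).
        return self.rows.get(key)


@dataclass
class TransactionProcessor:
    """TP: receives an application's request and asks DPs for the data."""
    site: str
    dps: list

    def request(self, key):
        # Ask each known DP in turn; the caller never sees which one answered.
        for dp in self.dps:
            row = dp.fetch(key)
            if row is not None:
                return row
        return None


dp_a = DataProcessor("site_A", {1: {"name": "Ann"}})
dp_b = DataProcessor("site_B", {2: {"name": "Bob"}})

# Two TPs share the same two DPs (multiple TPs can use a single DP).
tp_1 = TransactionProcessor("site_A", [dp_a, dp_b])
tp_2 = TransactionProcessor("site_B", [dp_a, dp_b])

print(tp_1.request(2))  # data held at site_B, requested from site_A
print(tp_2.request(1))  # data held at site_A, requested from site_B
```

Polling every DP in turn is just the simplest thing that works for a sketch; it says nothing about how an actual TP locates data.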

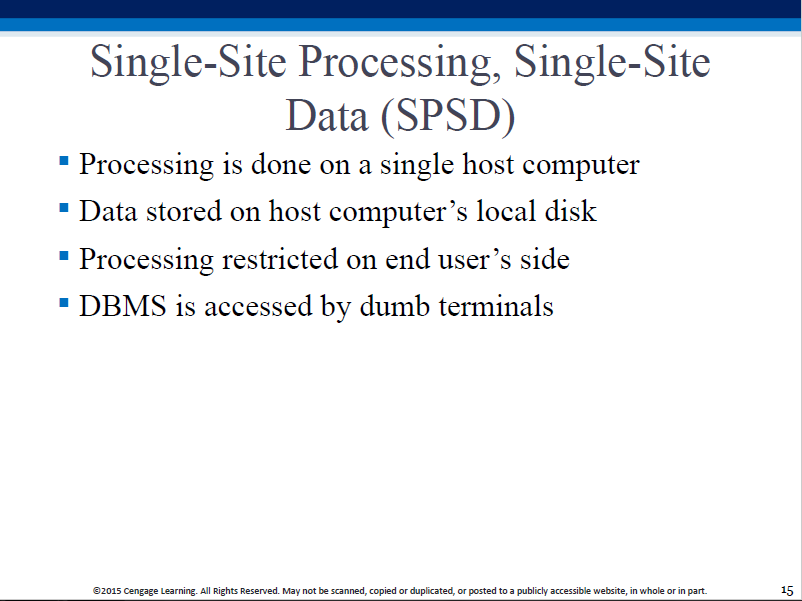

Not truly 'distributed' (data I/O is centralized).

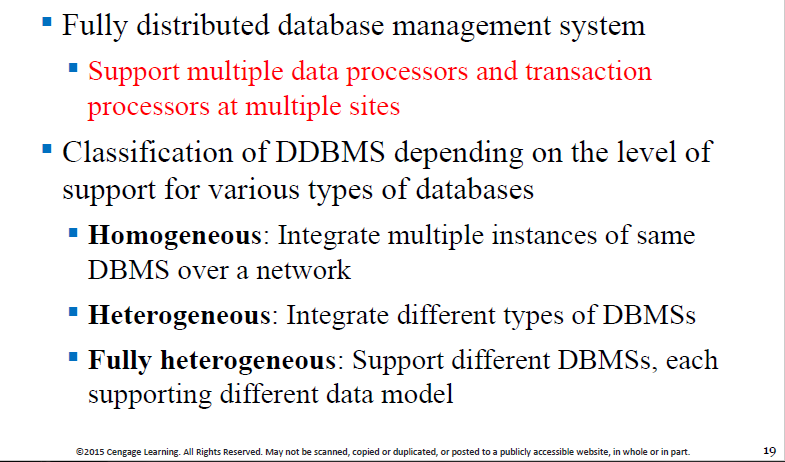

"The whole enchilada!".. Comes in three varieties.

The above schematic is the same as the one you saw earlier.

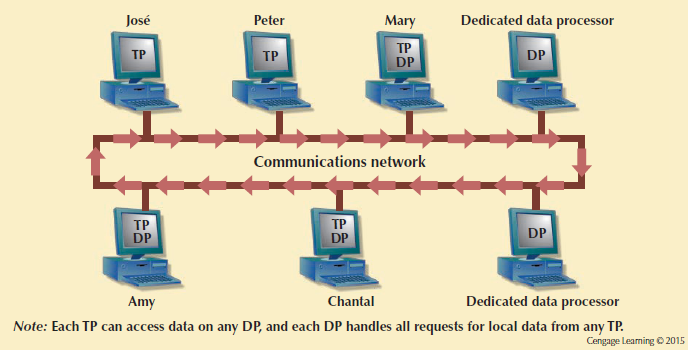

In a fully distributed database, the TPs (that request data) are distributed, as are the DPs (that serve data), like so [you saw this in an earlier slide]:

The DDBMS uses protocols to transport commands as well as data across its network, between the distributed TPs and DPs: it receives data requests from multiple TPs, collects and synchronizes the needed data from multiple DPs, and routes to each TP the data it requested. In other words, since transactions need data, the DDBMS acts as a switch, shuttling and routing data from multiple DPs to multiple TPs.

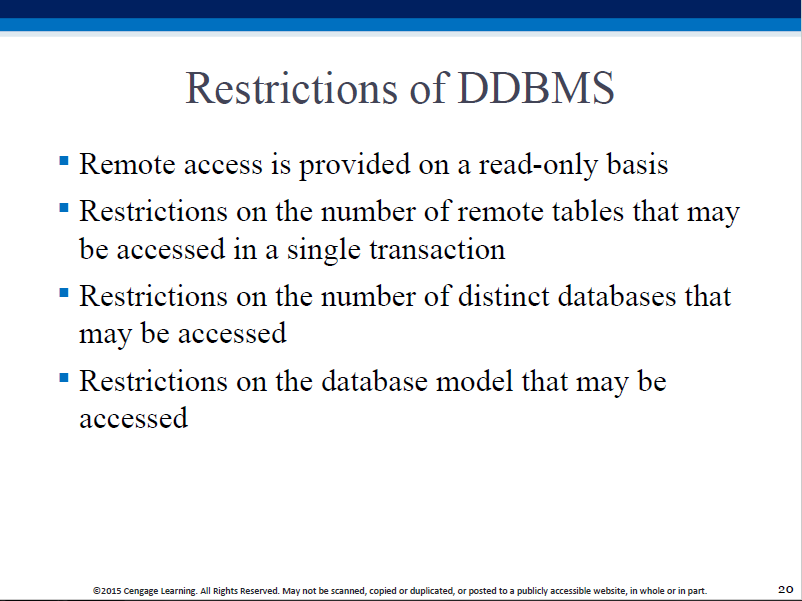

DDBMSs span the spectrum from homogeneous to fully heterogeneous, and tend to come with restrictions.

Remote/localized requests and transactions:

Distributed (not localized) requests and transactions:

Distributed transactions are what need to be carefully executed so as to maintain distribution transparency - the transactions have to execute 'as if' they all ran on the same machine/location. This is achieved using '2PC' [explained in upcoming slides].

TP must ensure that all parts of a transaction (across sites) are completed, before doing a COMMIT.

Can't COMMIT the entire transaction, can't ROLLBACK it either! Solution: 2PC.

The following is the sequence of steps (phase 1, phase 2) that are used to effect a complete distributed transaction:

And here's how a transaction is aborted, when one of the participating nodes is unable to commit a sub-transaction:

In the two phase commit protocol, as the name implies, there are two phases:

The devil's in the details - all sorts of variations/refinements exist in implementations, but the above is the overall (simple, robust) idea.

It's an all-or-nothing scheme: either all nodes of a distributed transaction locally commit (in which case the overall transaction is successful), or all of them locally abort (in which case the overall transaction fails and needs to be redone). Either way, the DB is not left in an inconsistent state, unlike in the 'Problem!' slide.
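Here is a minimal Python sketch of the all-or-nothing idea, assuming an in-memory coordinator and participants. The `Participant` class, its `can_commit` flag and the state names are invented for illustration; real implementations add logging, timeouts and recovery, all of which are left out.

```python
class Participant:
    """A node holding one sub-transaction (hypothetical, in-memory only)."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "INIT"

    def prepare(self):
        # Phase 1: vote YES only if the local sub-transaction can commit.
        self.state = "PREPARED" if self.can_commit else "ABORTED"
        return self.can_commit

    def commit(self):
        self.state = "COMMITTED"

    def abort(self):
        self.state = "ABORTED"


def two_phase_commit(participants):
    # Phase 1 (voting): collect a vote from every participant.
    votes = [p.prepare() for p in participants]

    # Phase 2 (decision): commit only if every vote was YES, else abort all.
    if all(votes):
        for p in participants:
            p.commit()
        return "COMMITTED"
    for p in participants:
        p.abort()
    return "ABORTED"


ok_nodes = [Participant("A"), Participant("B")]
print(two_phase_commit(ok_nodes), [p.state for p in ok_nodes])

bad_nodes = [Participant("A"), Participant("B", can_commit=False)]
print(two_phase_commit(bad_nodes), [p.state for p in bad_nodes])
```

In the second run, node A was ready to commit but is told to abort because B voted no, so no node ends up committed on its own.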

FYI, this is yet another description :)

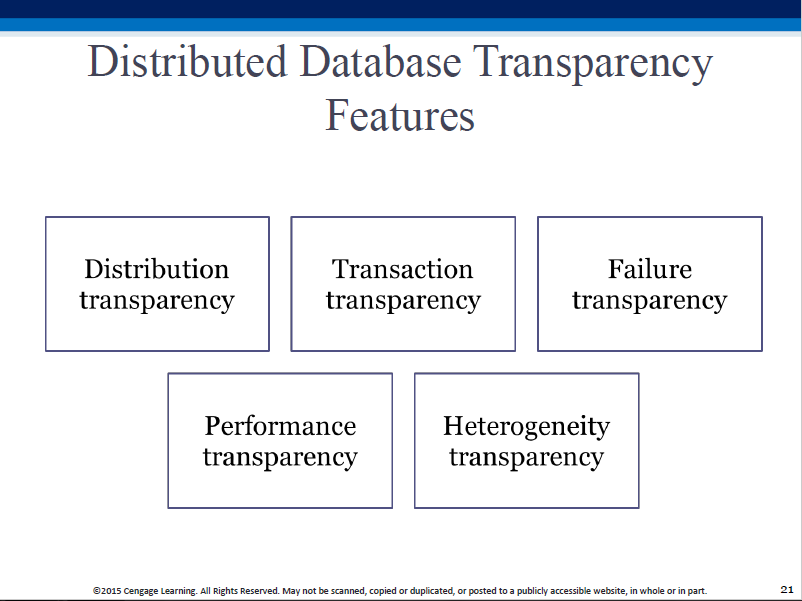

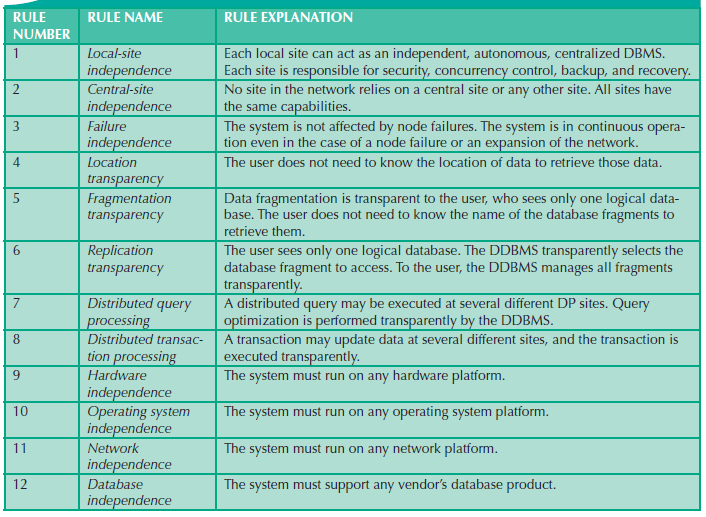

DDBMSs are designed to HIDE distribution specifics - this feature is called 'transparency', and can be classified into different kinds.

Fragmentation transparency: end user does not know that the data is fragmented.

Location transparency: end user does not know where fragments are located.

Location mapping transparency: end user does not know how fragments are mapped.

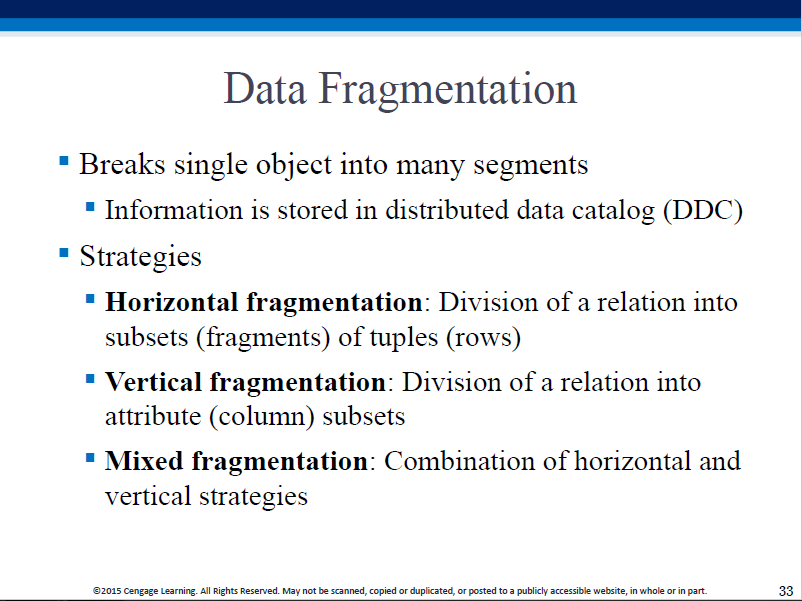

Distribution transparency is supported via a distributed data dictionary (DDD), also known as a distributed data catalog (DDC). It contains the distributed global schema, which local TPs use to translate user requests for processing by DPs.
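To make the dictionary's role concrete, here is a small Python sketch of a TP using a DDD to translate a request on a global table into per-site sub-requests. Everything named here (the `EMPLOYEE` table, fragments `E1`/`E2`, the site names, the `translate` and `execute` helpers) is a made-up example, not an actual DDBMS interface.

```python
# Hypothetical DDD: global table name -> (fragment, site) pairs.
DDD = {
    "EMPLOYEE": [("E1", "site_A"), ("E2", "site_B")],
}

# Hypothetical local storage managed by the DP at each site.
LOCAL_DATA = {
    "site_A": {"E1": [{"id": 1, "name": "Ann"}]},
    "site_B": {"E2": [{"id": 2, "name": "Bob"}]},
}


def translate(table):
    """TP step: turn a request on a global table into per-site sub-requests."""
    return [{"site": site, "fragment": frag} for frag, site in DDD[table]]


def execute(table):
    """Run each sub-request at its DP and merge the results for the user."""
    rows = []
    for sub in translate(table):
        rows.extend(LOCAL_DATA[sub["site"]][sub["fragment"]])
    return rows


print(translate("EMPLOYEE"))  # what the TP sends to the DPs
print(execute("EMPLOYEE"))    # what the user sees: one table
```

The user only ever names `EMPLOYEE`; the fragment names and sites stay inside the dictionary lookup.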

We also need to take network delay and network failure ("partitioning") into account when planning for or evaluating transparency.

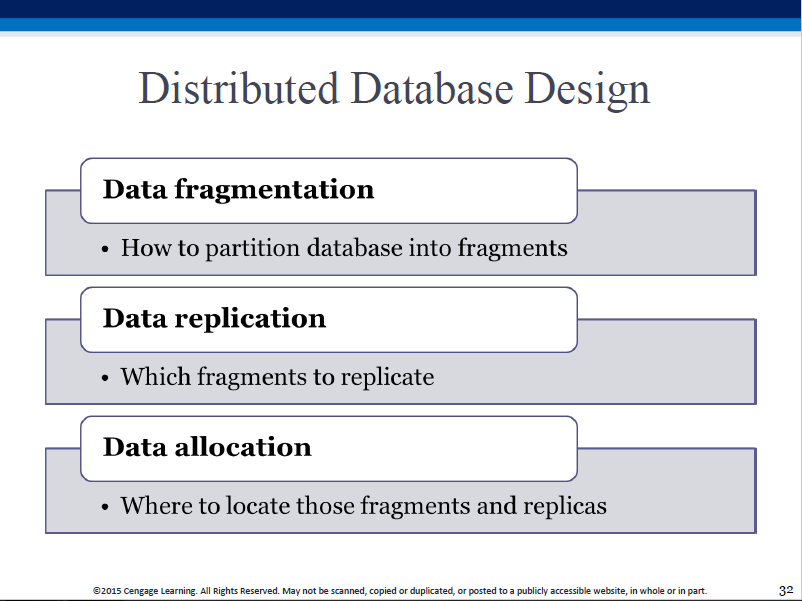

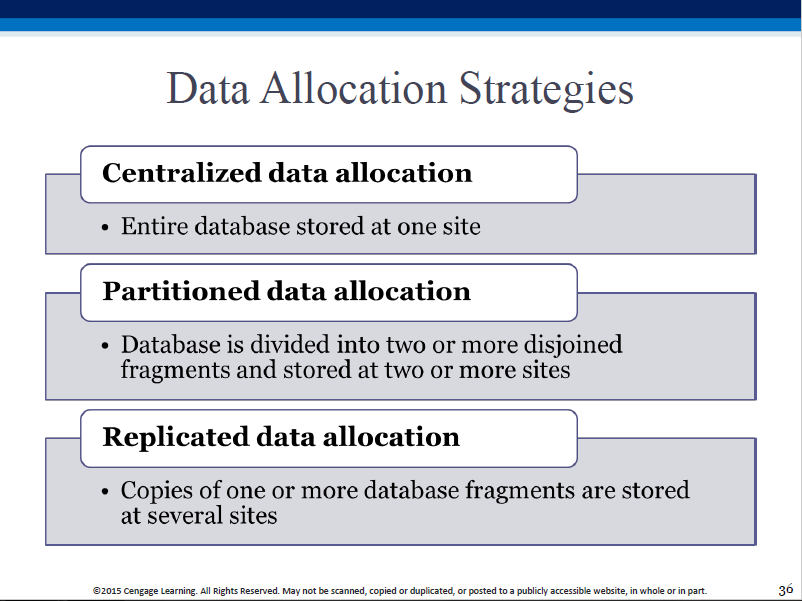

Partition (divide), replicate (copy)? Where to store the partitions/copies?

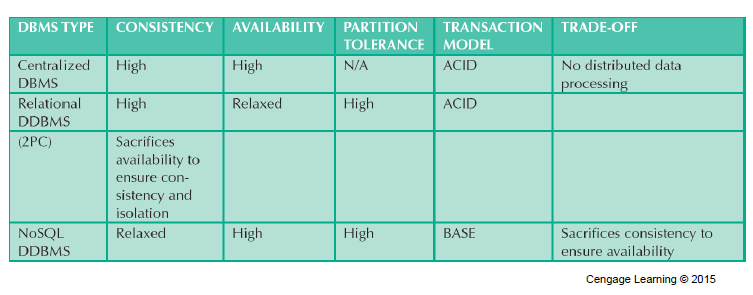

Consistency: always correct data.

Availability: requests are always filled.

Partition ("outage") tolerance: continue to operate even if network failures cut some nodes off from the rest (or some/most nodes fail).

The CAP theorem 'used to say' that in a networked (distributed) DB system, at most 2 out of 3 of the above are achievable [CP, CA, or AP]. But CA means giving up partition tolerance, and a distributed system that can't tolerate partitions can't even operate when one occurs, which makes CA a moot point! In other words, CA doesn't exist, i.e. low P is not an option. So now we think of it differently: in the event of a network partition (P has occurred), only one of availability or consistency is achievable.
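The 'pick C or A once a partition happens' view can be illustrated with a toy replica in Python. The `Replica` class, its `mode` flag and the values `"v1"`/`"v2"` are assumptions made up for this sketch; it models only the read path of a single cut-off node.

```python
class Replica:
    """One copy of a data item (hypothetical toy model)."""

    def __init__(self, mode):
        self.mode = mode          # "CP" or "AP"
        self.value = "v1"
        self.partitioned = False  # True when cut off from the other replicas

    def read(self):
        if self.partitioned and self.mode == "CP":
            # Consistency first: refuse rather than risk returning stale data.
            raise RuntimeError("unavailable during partition")
        # Availability first (or no partition): answer with the local copy.
        return self.value


for mode in ("CP", "AP"):
    r = Replica(mode)
    r.partitioned = True  # a write of "v2" elsewhere can no longer reach r
    try:
        print(mode, "->", r.read())
    except RuntimeError as err:
        print(mode, "->", err)
```

The CP replica gives up availability (the read fails), while the AP replica gives up consistency (it answers with `"v1"`, which may be stale).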

In the 'ACID' (Atomicity, Consistency, Isolation, Durability) world of older relational DBs, the CAP theorem was a reminder that it is usually difficult to achieve C, A and P all at once, and that consistency is more important than availability.

In today's 'BASE' (Basically Available, Soft state, Eventually consistent) model of non-relational (e.g. NoSQL) DBs, we prefer to sacrifice consistency in favor of availability.

Think of these more as checklist items, rather than commandments.